
Software Engineer at Google Research India. B.Tech from the Indian Institute of Technology, Delhi, advised by Dr. Mausam and Dr. Parag Singla. Previously an intern at MILA. |


Research |


One of O(100) core contributors listed in the Gemini v1.0 Technical Report, 2023 |


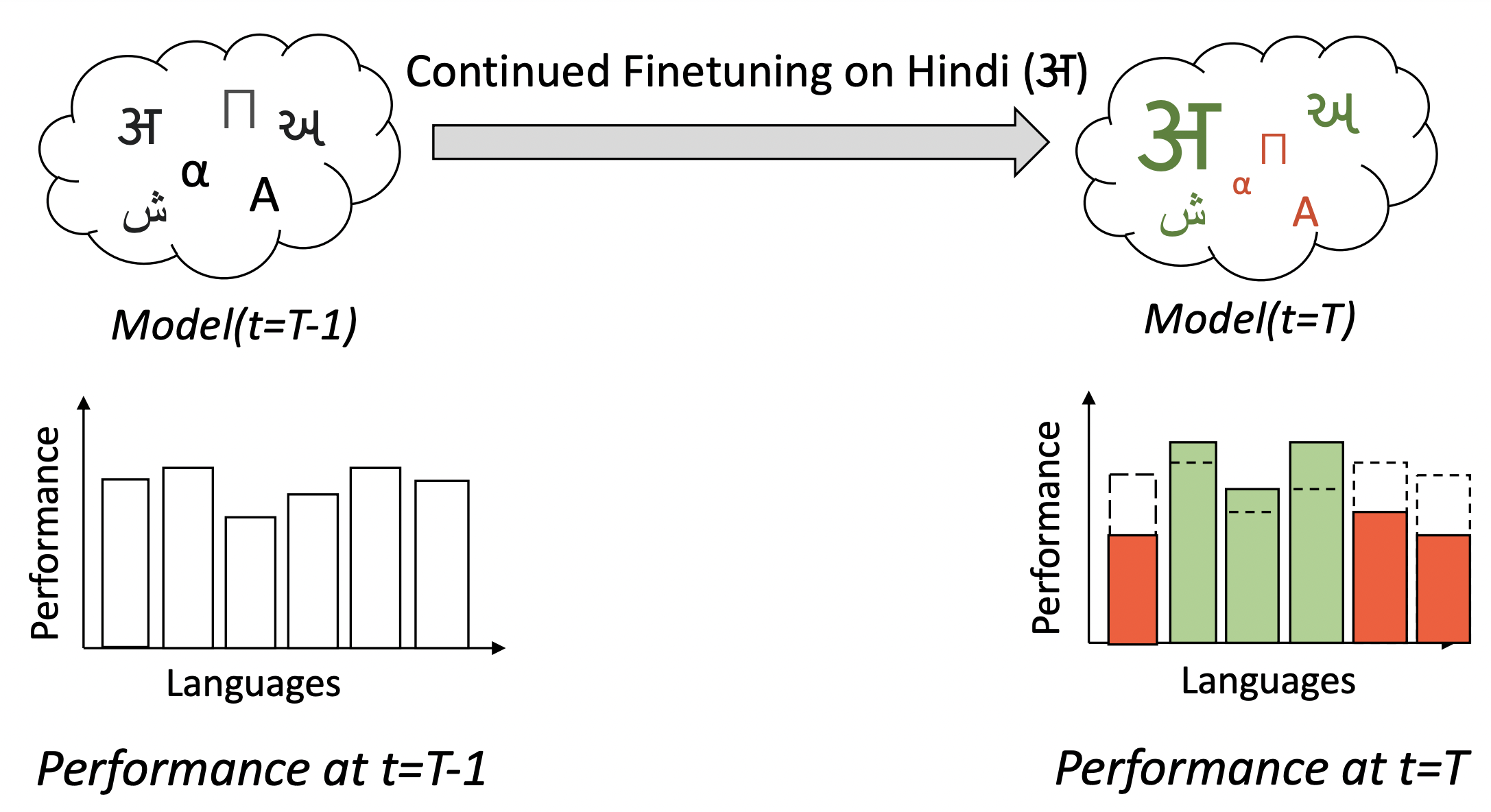

Kartikeya Badola, Shachi Dave, Partha Talukdar ACL (Findings) & RepL4NLP Workshop, 2023 A study on continual learning from a multilingual perspective. Proposes finetuning pipelines using parameter-efficient finetuning methods to minimize language-specific catastrophic forgetting while encouraging positive cross-lingual transfer in this setup. |


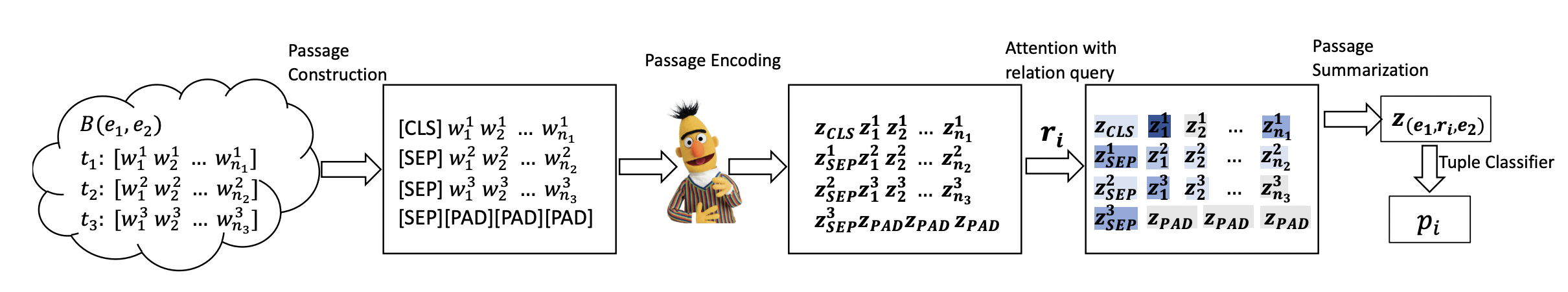

Vipul Rathore*, Kartikeya Badola*, Parag Singla, Mausam ACL (Main), 2022 A simple BERT-based model for the multi-instance multi-label setting of distantly supervised relation extraction (DS-RE). Outperforms existing state-of-the-art models on monolingual and multilingual DS-RE datasets. |


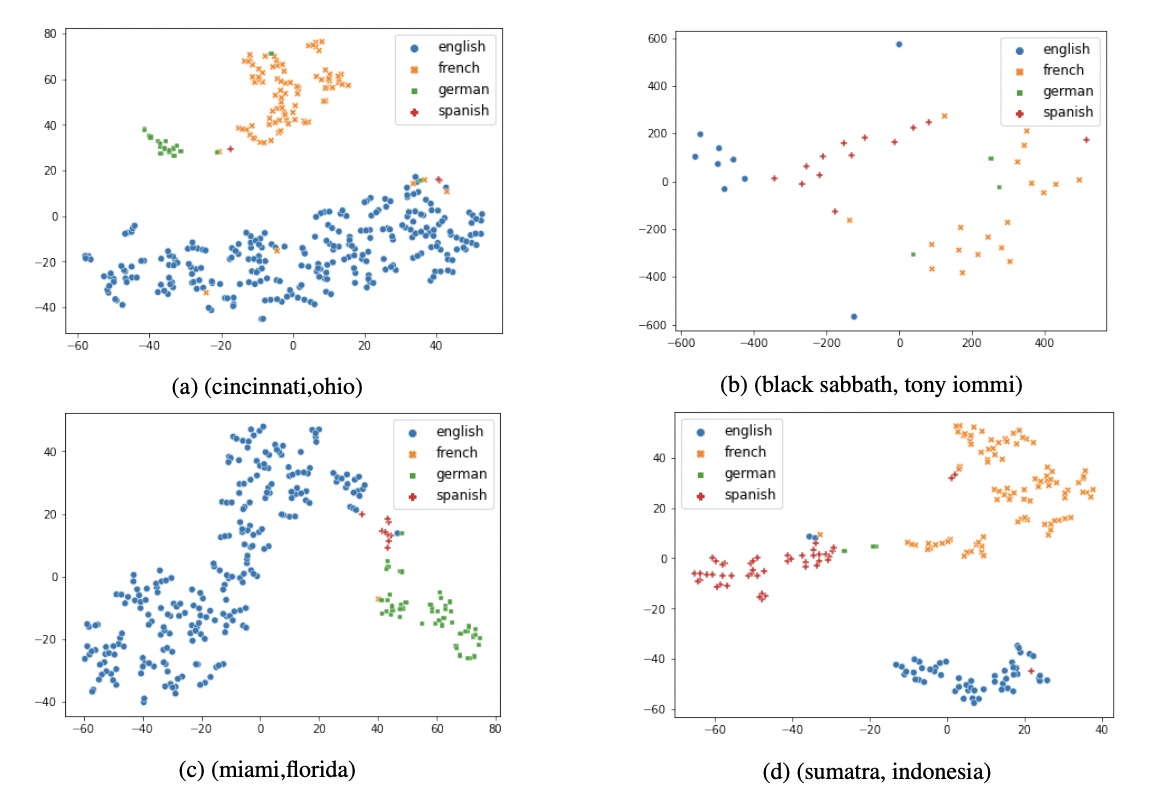

Abhyuday Bhartiya*, Kartikeya Badola*, Mausam ACL (Main), 2022 A high-quality dataset for multilingual distantly supervised relation extraction (DS-RE), along with the first baseline results for the task. |


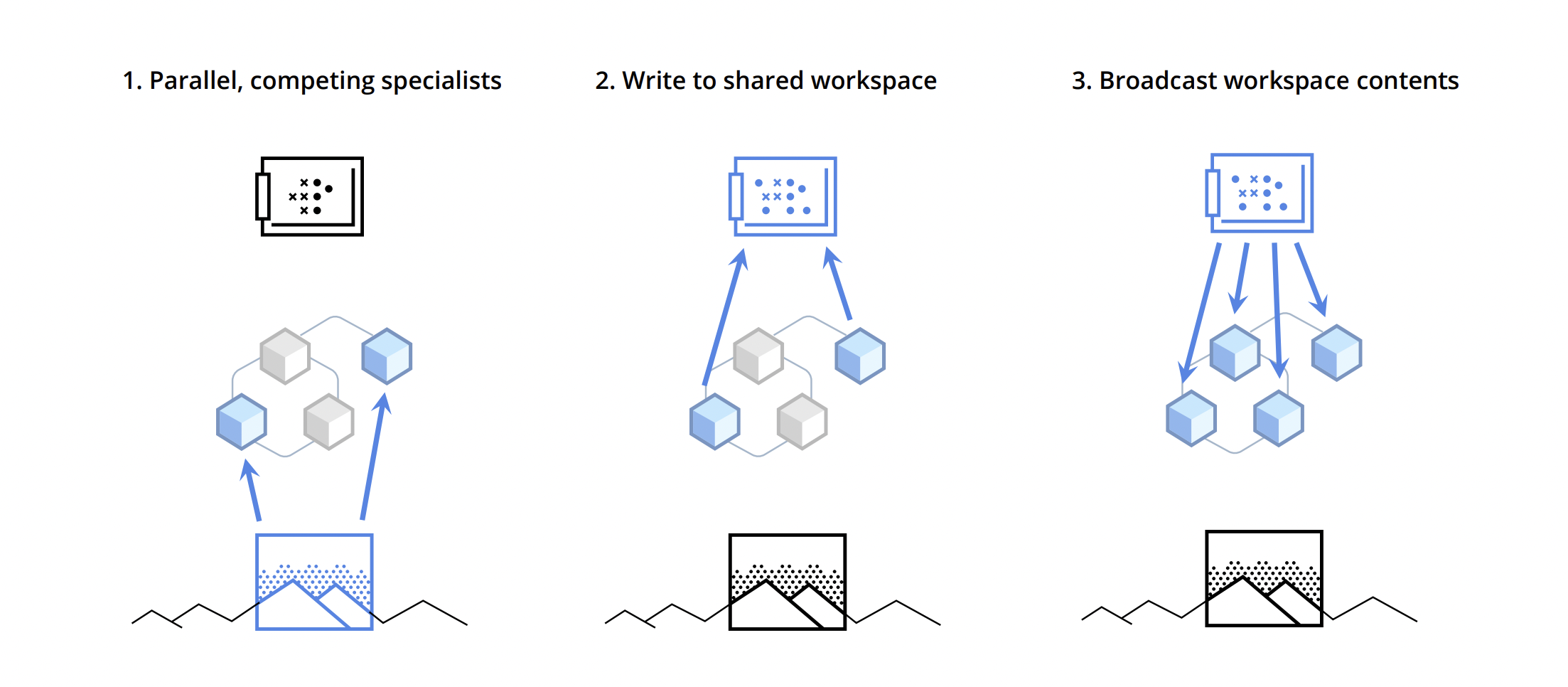

Anirudh Goyal, Aniket Didolkar, Alex Lamb, Kartikeya Badola, Nan Rosemary Ke, Nasim Rahaman, Jonathan Binas, Charles Blundell, Michael Mozer, Yoshua Bengio ICLR (Oral), 2022 Uses a global channel with an inherent bottleneck to model communication between specialist modules in a modular neural network (as opposed to all-pairs attention updates). Achieves better generalization across tasks from various domains. |


Service |


| Reviewer for JAIR 2023 |

| Reviewer for NLP for Conversational AI 2023 |

| Reviewer for ACL Rolling Review (ARR) 2022 |

| TA for the course on NLP (Dr. Mausam, Fall 2021) |